Introduction

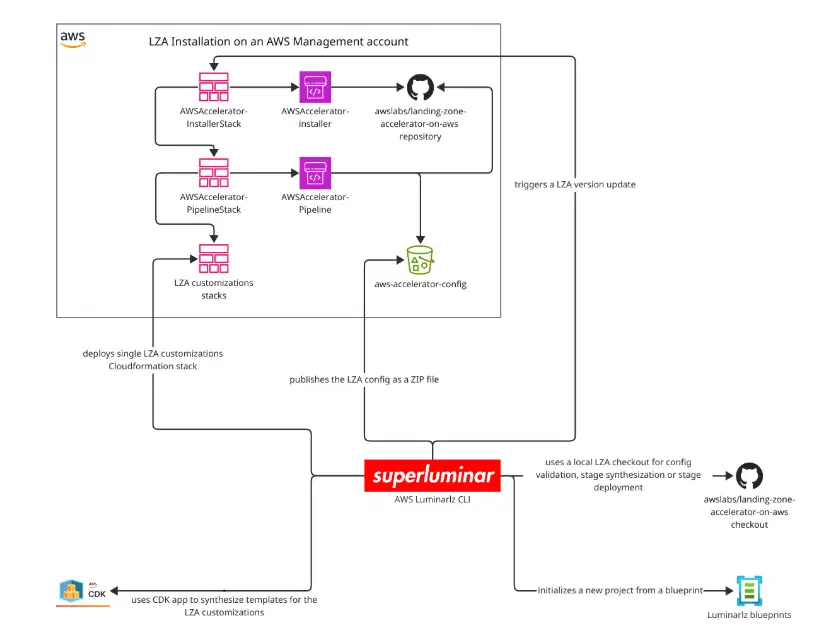

I first heard about the AWS luminarlz CLI in a LinkedIn post. Read the quick announcement here. As a heavy user of the AWS Landing Zone Accelerator, I wanted to give it a shot.

First, I needed to migrate my setup from the deprecated CodeCommit to S3 as the location for config files. Feel free to read about this in my previous post Updating the LZA from CodeCommit to S3.

With that baseline in place, I tested the AWS luminarlz CLI v0.0.44. Read more about this tool in the corresponding blog post from superluminar.

Here’s a quote from their post:

Deploying a well-architected AWS Landing Zone can be challenging, especially for small to mid-size engineering organizations.

The Landing Zone Accelerator on AWS simplifies this process, but managing configurations and customizations can still be complex and repetitive.

To minimize these obstacles for solution architects and builders alike, we developed the AWS Luminarlz CLI. Using the AWS Luminarlz CLI and a TypeScript-based blueprint streamlines configuration, automates common tasks, and enables easier maintenance and customization.

Let’s dive into it.

Challenge

The AWS luminarlz CLI is currently only recommended for greenfield projects, as described in the docs. But what about brownfield setups?

I have an existing setup and want to use the templating benefits:

- Write customization stacks in CDK

- Run pipeline stages in isolation

The question is: how do we adapt a brownfield setup to work with the CLI?

Solution

Here’s my approach using v0.0.44.

Getting started

First, initialize the CLI:

npx @superluminar-io/aws-luminarlz-cli init

This creates template files and a README.md with a clear structure (rename your existing README.md first, as its creation will be skipped otherwise). Next, install the dependencies and synthesize the YAML templates:

npm i                  # install dependencies
npm run cli -- synth   # create yaml templates

I inspected the generated files in the aws-accelerator-config.out folder and adapted them to match my existing config.

Along the way, I had some clarifying exchanges about implementation details:

- https://github.com/superluminar-io/aws-luminarlz-cli/issues/74

- https://github.com/superluminar-io/aws-luminarlz-cli/issues/130

Configuring config.ts

The generated config.ts file contains TODOs that need to be completed. I kept my old config file and compared it with the templated one to identify differences.

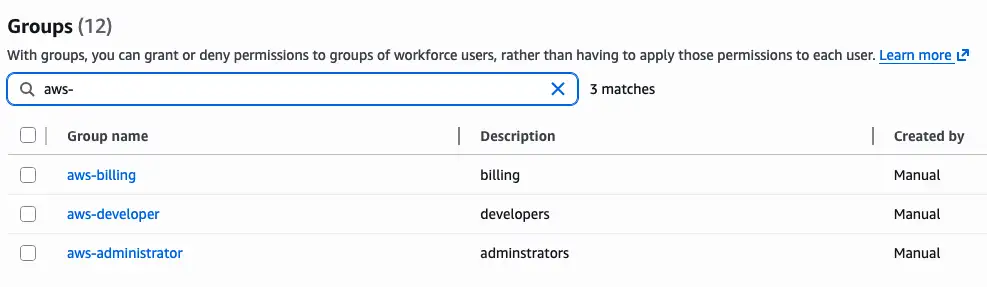

First, in IAM Identity Center, create the groups and add the respective users manually.

Since I use the rootmail feature (more details at the rootmail CDK project and my blog post), I added functions to replicate that behavior.

The great thing is you can create your own TypeScript functions. Here’s my example:

/**
 * Add a root mail domain to the email address.
 *
 * @param prefix the prefix, e.g. aws-roots
 * @param subaddress the part after the +
 * @param rootMailDomain the root mail domain to add to the email address
 * @returns the email address with the root mail domain
 */
function withRootMailDomain(prefix: string, subaddress: string, rootMailDomain: string): string {
  return `${prefix}+${subaddress}@${rootMailDomain}`;
}

Simple, but the key point is: you can adapt the TypeScript code however you need.
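To make this more concrete, here is a minimal sketch of how the helper above could be used to derive one root email address per account. The prefix, the domain, and the account names are hypothetical placeholders and not taken from my actual config; how the resulting addresses are wired into the templates depends on your own config.ts.

```typescript
// Same helper as above, repeated so this sketch is self-contained.
function withRootMailDomain(prefix: string, subaddress: string, rootMailDomain: string): string {
  return `${prefix}+${subaddress}@${rootMailDomain}`;
}

// Hypothetical values for illustration only; replace them with your own.
const ROOT_MAIL_PREFIX = 'aws-roots';
const ROOT_MAIL_DOMAIN = 'aws.example.com';

// Hypothetical account names, used as the subaddress (the part after the +).
const accountNames: string[] = ['management', 'log-archive', 'audit'];

// Build a lookup from account name to its root email address.
const accountEmails: Record<string, string> = {};
for (const name of accountNames) {
  accountEmails[name] = withRootMailDomain(ROOT_MAIL_PREFIX, name, ROOT_MAIL_DOMAIN);
}

console.log(accountEmails['audit']); // aws-roots+audit@aws.example.com
```

Keeping the address scheme in one function means a change to the prefix or domain only has to happen in one place.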

After completing the config, I repeated this process for every YAML config file, committing after each one.

Iterating on YAML configs

For each config file:

- Run npm run cli -- lza config synth

- Compare your old YAML with the new template and adapt accordingly

- Run npm run cli -- lza config validate for immediate feedback

I must say that lza config validate is very handy: it lets you validate the config before committing instead of waiting for the pipeline (which validates it again, of course). It also downloads the Landing Zone Accelerator in your currently deployed version into a temporary folder. This is nice!

# ...
Cloning into '/var/folders/cn/d8yf4brd3jz2htcnwks90k3c0000gn/T/landing-zone-accelerator-on-aws-release-v1.13.1'...
Cloned landing-zone-accelerator-on-aws repository.
yarn install v1.22.22
# ...

Now run the pipeline

Let’s run the pipeline and see what happens. First error:

❌ AWSAccelerator-PrepareStack-123456789012-eu-central-1 failed: Error: The stack named AWSAccelerator-PrepareStack-123456789012-eu-central-1 failed to
deploy: UPDATE_ROLLBACK_COMPLETE: Received response status [FAILED] from custom resource. Message returned: Max Allowed SCPs for ou "Security" is 5, found
total 6 scps in updated list to attach. Updated list of scps for attachment is PreventExternalSharing,aws-guardrails-SnrgIj,AcceleratorGuardrails1,
FullAWSAccess,AcceleratorGuardrails2,aws-guardrails-YbGlPJ,Max Allowed SCPs for ou "Infrastructure" is 5, found total 6 scps in updated list to attach.
Updated list of scps for attachment is PreventExternalSharing,AcceleratorGuardrails1,FullAWSAccess,AcceleratorGuardrails2,AcceleratorGuardrails3,aws-guardrails-ncjEUa

I reworked the SCPs, and before retrying the pipeline, disabled termination protection on the failed stack. If you don’t, the next update will fail.
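The error above comes down to a simple count: at most 5 SCPs can be attached to a single OU, and policies that are not defined in the LZA config (FullAWSAccess and the aws-guardrails-* entries in the message) count as well. A check like the following could catch this before the pipeline runs. It is only a sketch: the function name and the input shape are my own assumptions and not part of the CLI or the LZA, and the Sandbox entry is a made-up example. The Security and Infrastructure lists are copied from the error message.

```typescript
// AWS Organizations allows at most 5 SCPs attached to a single OU.
const MAX_SCPS_PER_OU = 5;

// Hypothetical helper: returns every OU whose SCP list exceeds the limit.
function findOusOverScpLimit(
  attachments: Record<string, string[]>,
  max: number = MAX_SCPS_PER_OU,
): { ou: string; count: number }[] {
  return Object.keys(attachments)
    .map((ou) => ({ ou, count: (attachments[ou] ?? []).length }))
    .filter((entry) => entry.count > max);
}

// The full attachment list per OU, including SCPs managed outside the LZA config.
const scpAttachments: Record<string, string[]> = {
  Security: [
    'PreventExternalSharing', 'aws-guardrails-SnrgIj', 'AcceleratorGuardrails1',
    'FullAWSAccess', 'AcceleratorGuardrails2', 'aws-guardrails-YbGlPJ',
  ],
  Infrastructure: [
    'PreventExternalSharing', 'AcceleratorGuardrails1', 'FullAWSAccess',
    'AcceleratorGuardrails2', 'AcceleratorGuardrails3', 'aws-guardrails-ncjEUa',
  ],
  // Made-up OU that stays within the limit.
  Sandbox: ['FullAWSAccess', 'AcceleratorGuardrails1'],
};

const overLimit = findOusOverScpLimit(scpAttachments);
for (const { ou, count } of overLimit) {
  console.log(`${ou}: ${count} SCPs attached, max is ${MAX_SCPS_PER_OU}`);
}
```

With the lists from the error message, both Security and Infrastructure are reported with 6 SCPs each, which matches what the PrepareStack complained about.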

Limit increases didn’t work, even though quota code L-E619E033 exists in Service Quotas (AWS console link):

limits:
  # Increase the default maximum number of accounts in the organization
  - serviceCode: organizations
    quotaCode: L-E619E033
    desiredValue: 100
    regions:
      - <% globalRegion %>
    deploymentTargets:
      accounts:
        - Management

I had to disable it for now.

Update the LZA version

At first I thought this would not work, because AWS_ACCELERATOR_INSTALLER_STACK_NAME is hardcoded to 'AWSAccelerator-InstallerStack' and I had originally chosen a different name.

However, the CLI config makes it easy to override:

export const config: Config = {
  ...baseConfig,
  templates,
  environments,
  managementAccountId: MANAGEMENT_ACCOUNT_ID,
  homeRegion: HOME_REGION,
  enabledRegions: ENABLED_REGIONS,
  awsAcceleratorVersion: AWS_ACCELERATOR_VERSION,
  awsAcceleratorInstallerStackName: 'lza' // <- overrides here. Love it
};

I successfully used it to upgrade from v1.12.1 to v1.13.1 🎉

Discovering lza-universal-configuration

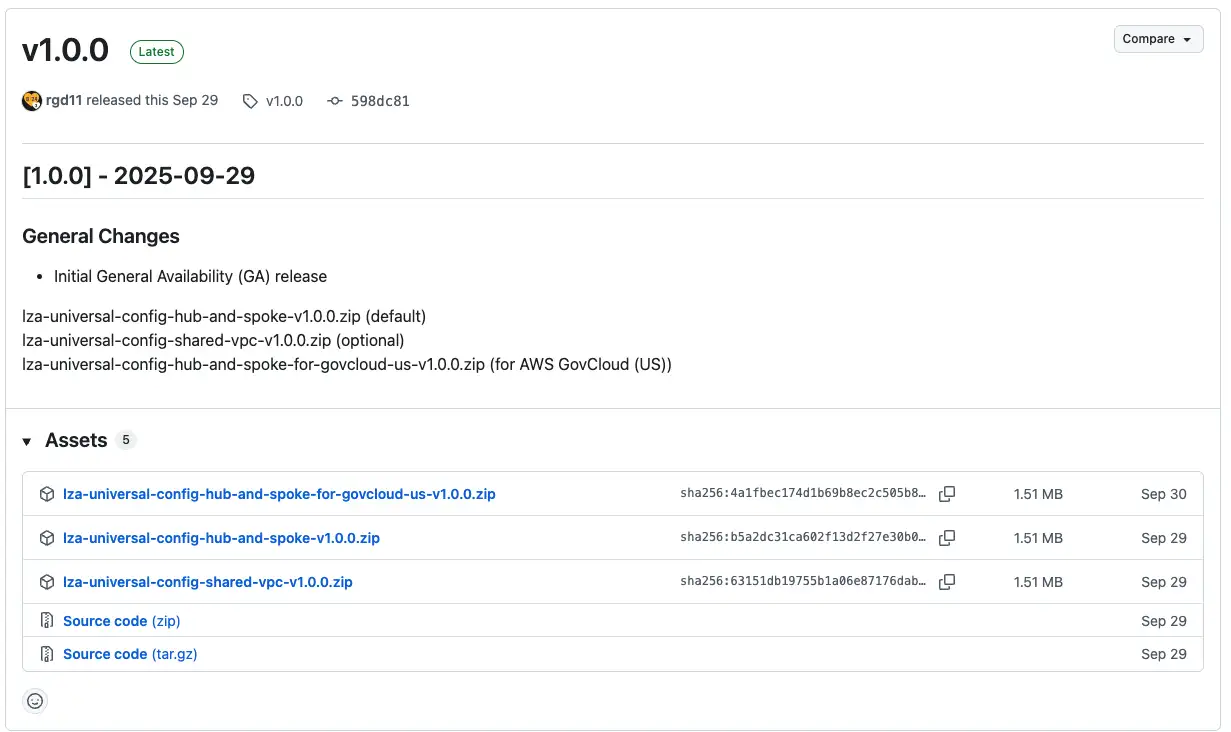

During my research, I found lza-universal-configuration, which comes directly from the AWS LZA team:

The LZA Universal Configuration provides enterprise-ready configuration templates that utilize the Landing Zone Accelerator on AWS (LZA) to establish secure, scalable, and well-architected multi-account AWS environments. It enables rapid deployment of baseline environments supporting multiple global compliance frameworks and industry-specific requirements.

The first release, v1.0.0, was on September 20th, 2025.

I found it hard to navigate the repo and understand how to actually run it. There’s already a PR to improve the docs. The sample configurations have been removed as of LZA v1.13.x, but you can still find them in the v1.12.6 tag if needed.

After studying the .gitlab-ci.yml, I mimicked the templating process:

cd modules
mkdir temp
mkdir temp/config
cp -r base/default/* temp/config
cp -r network/none/* temp/config
cd ../scripts
npm install
node index.js ../modules/temp/

I started with an empty network configuration in network-config.yaml:

defaultVpc:
  delete: true
  excludeAccounts: []
transitGateways: []
endpointPolicies: []
vpcs: []

This generated a comprehensive configuration:

modules/temp/
├── accounts-config.yaml
├── backup-policies
│   └── primary-backup-plan.json
├── declarative-policies
│   └── lza-core-vpc-block-public-access.json
├── dynamic-partitioning
│   └── log-filters.json
├── event-bus-policies
│   └── event-bus-policy.json
├── global-config.yaml
├── iam-config.yaml
├── iam-policies
│   ├── sample-end-user-policy.json
│   └── ssm-s3-policy.json
├── network-config.yaml
├── organization-config.yaml
├── rcp-policies
│   └── lza-core-rcp-guardrails-1.json
├── replacements-config.yaml
├── security-config.yaml
├── service-control-policies
│   ├── lza-core-guardrails-1.json
│   ├── lza-core-guardrails-2.json
│   ├── lza-core-sandbox-guardrails-1.json
│   ├── lza-core-security-guardrails-1.json
│   ├── lza-core-workloads-guardrails-1.json
│   ├── lza-infrastructure-guardrails-1.json
│   ├── lza-quarantine.json
│   └── lza-suspended-guardrails.json
├── ssm-documents
│   ├── attach-iam-instance-profile.yaml
│   └── enable-elb-logging.yaml
├── ssm-remediation-roles
│   ├── attach-ec2-instance-profile-remediation-role.json
│   └── elb-logging-enabled-remediation-role.json
├── tagging-policies
│   ├── org-tag-policy.json
│   └── s3-tag-policy.json
└── vpc-endpoint-policies
    └── default.json

12 directories, 29 files

Much more verbose than the AWS luminarlz CLI output.

Conclusion

What impressed me most:

- npm run cli -- lza config validate - Immediate feedback on config validity

- TypeScript customizations - Write customizations in TypeScript instead of YAML

- Isolated pipeline stages - Run stages independently

- ADR templates - Keep architecture decisions close to the code

Further thoughts

What if we combined the two approaches?

The lza-universal-configuration comes directly from AWS and has the latest updates, but the templating mechanism is basic compared to the AWS luminarlz CLI. It would be interesting to see these combined.

Or maybe it’s time for a new AWS luminarlz CLI blueprint…
