Why

I wanted my website fully hosted on AWS and deployed automatically, with a dev subdomain for testing.

Very often, when I want to learn a new topic (in this case cdk), I pick a personal project and pack in as much of the subject as possible. On client projects at work there are often time and budget constraints, whereas in my private projects I set the pace and the quality standard myself.

So let’s dive into it.

The challenge

I set myself the challenge of an all-in-one infrastructure-as-code deployment on AWS, meaning:

- self-contained: all resources should be on AWS

- a blog with `hugo` and a nice theme (in my opinion)

- using cdk and cdk-pipelines running in `eu-central-1`

- a monorepo with all the code components

- a local development option in Docker

- which includes building the code in the container with `build.sh`, so I can also test locally upfront

- a development stage on a `dev.manuel-vogel.de` subdomain

With those requirements in mind, I started from an existing and great cdk workshop by superluminar.

The solution

The workshop gave me a very useful baseline and showed how to compile the code locally as part of the deployment. In the workshop's case, it was a React app.

- The first thing I had to change was switching from a local build of the hugo site to a build in a Docker container.

- Adapting the CloudFront distribution to return fixed responses for `403` and `404` errors.

- Adapting the CloudFront redirects with a function, where I encountered several roadblocks.

- Adding a cdk pipeline, which is self-mutating, a concept that was new to me.

- Adding the possibility to secure the `dev` subdomain so that only a list of defined IP addresses or, more generally, only a known audience can access it.

Let’s go into the neat little details of each step!

Building the code

I transferred this approach to my hugo page, also using the bundling option to build inside a Docker image:

```typescript
import * as path from 'path';
import { AssetHashType, DockerImage } from 'aws-cdk-lib';
import * as s3deploy from 'aws-cdk-lib/aws-s3-deployment';

// inside the stack constructor; `bucket`, `distribution` and `props.buildStage` are defined elsewhere
new s3deploy.BucketDeployment(this, 'frontend-deployment', {
  sources: [
    s3deploy.Source.asset(path.join(__dirname, '../frontend'), {
      // to avoid mismatch between builds
      // we build the assets each time 👇🏽
      assetHash: `${Number(Math.random())}-${props.buildStage}`,
      assetHashType: AssetHashType.CUSTOM,
      bundling: {
        // for not hitting DockerHub rate limits from CodeBuild 👇🏽
        image: DockerImage.fromRegistry('public.ecr.aws/docker/library/node:lts-alpine'),
        command: [
          'sh', '-c',
          `
          apk update && apk add hugo=0.106.0-r3 &&
          npm --version && hugo version &&
          npm i && npm run build -- --environment ${props.buildStage} &&
          mkdir -p /asset-output && cp -r public-${props.buildStage}/* /asset-output
          `,
        ],
        // because we need to install dependencies
        user: 'root',
      },
    }),
  ],
  destinationBucket: bucket,
  distribution,
  distributionPaths: ['/*'],
});
```

I learned that, by convention, the compiled output must be placed in `/asset-output`, and that the container has to run as the root user because it installs apk packages such as the hugo binary.

Furthermore, the `npm run build -- --environment ${buildStage}` command tells hugo which environment-specific configuration to use. In this example, that configuration adapts the `baseURL` property for the subdomain of the development environment.
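Because the stage ends up inside an `sh -c` string, it can help to build that string in one place and accept only known stage names. The sketch below assumes the stages are called `dev` and `prod` and that the `build` npm script calls hugo. `bundlingCommand` is a hypothetical helper, not part of cdk.

```typescript
// Assumed stage names; the post only speaks of "development" and "production".
type BuildStage = 'dev' | 'prod';

const STAGES: readonly BuildStage[] = ['dev', 'prod'];

// Hypothetical helper: builds the command the bundling container runs for one stage.
// The stage is interpolated into `sh -c`, so it is checked against a fixed list
// instead of trusting arbitrary input.
function bundlingCommand(stage: string, hugoVersion = '0.106.0-r3'): [string, string, string] {
  if (!STAGES.includes(stage as BuildStage)) {
    throw new Error(`unknown build stage: ${stage}`);
  }
  const script = [
    `apk update && apk add hugo=${hugoVersion}`,
    'npm --version && hugo version',
    // `--` forwards `--environment <stage>` to the `build` script (assumed to run hugo)
    `npm i && npm run build -- --environment ${stage}`,
    // cdk uploads whatever ends up in /asset-output
    `mkdir -p /asset-output && cp -r public-${stage}/* /asset-output`,
  ].join(' && ');
  return ['sh', '-c', script];
}
```

The returned array could then be passed as `command: bundlingCommand(props.buildStage)` in the `bundling` options.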

Finally, I realized that cdk is clever enough to cache local builds. Although the development and production stages differ, cdk reused the same artifact for both. After talking to Thorsten Hoeger on the cdk.dev Slack, I therefore rebuild it every time by adding a custom hash:

```typescript
assetHash: `${Number(Math.random())}-${props.buildStage}`,
assetHashType: AssetHashType.CUSTOM,
```
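The random hash forces a rebuild on every synth. If you would rather rebuild only when the sources or the stage change, you can instead hash the file contents together with the stage name. The sketch below does this with Node's `crypto`. `readTree` and `stageAssetHash` are hypothetical helpers, not cdk APIs.

```typescript
import { createHash } from 'node:crypto';
import { readdirSync, readFileSync, statSync } from 'node:fs';
import { join, relative } from 'node:path';

interface SourceFile {
  path: string; // path relative to the asset root
  content: Buffer | string;
}

// Reads every file below `root` (hypothetical helper, no exclude patterns).
function readTree(root: string, dir: string = root): SourceFile[] {
  return readdirSync(dir).flatMap((name): SourceFile[] => {
    const full = join(dir, name);
    if (statSync(full).isDirectory()) return readTree(root, full);
    return [{ path: relative(root, full), content: readFileSync(full) }];
  });
}

// Deterministic hash over the stage name and all files (sorted by path):
// dev and prod get different hashes, and an unchanged stage reuses the cache.
function stageAssetHash(files: SourceFile[], stage: string): string {
  const hash = createHash('sha256');
  hash.update(`stage:${stage}\0`);
  const sorted = [...files].sort((a, b) => (a.path < b.path ? -1 : a.path > b.path ? 1 : 0));
  for (const file of sorted) {
    const bytes = typeof file.content === 'string' ? Buffer.from(file.content, 'utf8') : file.content;
    // length-prefix each entry so file boundaries cannot be confused
    hash.update(`${file.path}\0${bytes.length}\0`);
    hash.update(bytes);
  }
  return hash.digest('hex');
}
```

It could replace the random value, for example `assetHash: stageAssetHash(readTree(path.join(__dirname, '../frontend')), props.buildStage)`. As written, it hashes everything below the directory, including a local `node_modules` or `public-*` folder if present. It also does not notice changes to the bundling command itself, so those would have to be excluded or mixed into the hash.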

With those challenges solved, it was time to tackle CloudFront and the redirects for subfolders. Up to this point, I had only been running cdk locally.

Fixing the requests on CloudFront

The first thing I noticed was that the errors were not handled correctly.

Handling errors

The first step was to adapt the `responsePagePath` for this hugo theme, as its error pages live in the `/en` subfolder, the theme's default language:

```typescript
import { Duration } from 'aws-cdk-lib';
import * as cloudfront from 'aws-cdk-lib/aws-cloudfront';

const distribution = new cloudfront.Distribution(
  this,
  'frontend-distribution',
  {
    // defaultBehavior and other properties omitted here
    certificate: certificate,
    defaultRootObject: 'index.html',
    domainNames: [siteDomain],
    minimumProtocolVersion: cloudfront.SecurityPolicyProtocol.TLS_V1_2_2021,
    errorResponses: [
      {
        httpStatus: 403,
        responseHttpStatus: 404,
        // adapt to the subpath 👇🏽
        responsePagePath: '/en/404.html',
        ttl: Duration.minutes(30),
      },
      {
        httpStatus: 404,
        responseHttpStatus: 404,
        responsePagePath: '/en/404.html',
        ttl: Duration.minutes(30),
      },
    ],
  },
);
```
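You need to map `403` as well as `404` because S3 answers a request for a missing key with `403 Access Denied` instead of `404` when the caller lacks the `s3:ListBucket` permission. Here, the caller is CloudFront through the origin access identity. Below is a simplified, standalone model of the two entries above: plain data with no CloudFront involved, and `viewerResponse` is a hypothetical name.

```typescript
// The two errorResponses entries from the distribution, as plain data.
interface ErrorResponse {
  httpStatus: number;         // status returned by the origin (S3)
  responseHttpStatus: number; // status CloudFront sends to the viewer
  responsePagePath: string;   // page served instead of the origin's error body
  ttlSeconds: number;         // how long CloudFront caches the error response
}

const errorResponses: ErrorResponse[] = [
  { httpStatus: 403, responseHttpStatus: 404, responsePagePath: '/en/404.html', ttlSeconds: 30 * 60 },
  { httpStatus: 404, responseHttpStatus: 404, responsePagePath: '/en/404.html', ttlSeconds: 30 * 60 },
];

interface ViewerResponse {
  status: number;
  servedPath: string;
}

// Simplified model: which status and page the viewer ends up with for a given origin status.
function viewerResponse(requestPath: string, originStatus: number): ViewerResponse {
  const rule = errorResponses.find((r) => r.httpStatus === originStatus);
  if (!rule) return { status: originStatus, servedPath: requestPath };
  return { status: rule.responseHttpStatus, servedPath: rule.responsePagePath };
}
```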

DefaultRootObject in CloudFront

The next obstacle was a bigger one, related to CloudFront's DefaultRootObject behavior. Requests for subfolders returned 40x errors from S3, because CloudFront applies the default root object only to the root URL and not to subdirectories.

My first attempt was a Lambda@Edge function. cdk supports these in the experimental package (`cloudfront.experimental.EdgeFunction`) because the function has to live in us-east-1. This would have been the smoother solution, as I could also have written the function in TypeScript.

However, the redirects did not work correctly:

```typescript
// Note: infers the filename: static-hosting.redirect-request.ts
const redirectRequest = new lambdaNodeJs.NodejsFunction(this, 'redirect-request');

const redirectFunctionVersion = lambda.Version.fromVersionArn(
  this,
  'Version',
  `${redirectRequest.functionArn}:${redirectRequest.currentVersion.version}`,
);

const redirectRequestEdge = new cloudfront.experimental.EdgeFunction(this, 'redirect-request-cf', {
  runtime: lambda.Runtime.NODEJS_14_X,
  handler: 'index.handler',
  code: lambda.Code.fromAsset(__dirname + '/lambda'),
});
```
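When you move redirect logic between the two function types, note that the request sits in a different place. Lambda@Edge receives it at `event.Records[0].cf.request`, whereas CloudFront Functions get it at `event.request`. In the snippets in this post, both the `EdgeFunction` and the later CloudFront Function load code from the `lambda` folder. If that is the same file, Lambda@Edge would find neither an exported `handler` nor the request where it expects it.

Below is a sketch of the same rewrite as a Lambda@Edge viewer-request handler. It uses minimal hand-written event types instead of `@types/aws-lambda`, an assumption made to keep it self-contained.

```typescript
// Minimal subset of the Lambda@Edge event shape (only the fields used here).
interface CloudFrontRequest {
  uri: string;
  method: string;
  headers: Record<string, { key?: string; value: string }[]>;
}

interface CloudFrontRequestEvent {
  Records: { cf: { request: CloudFrontRequest } }[];
}

// Viewer-request handler: appends index.html to "directory" URIs.
// Returning the (modified) request forwards it to the cache/origin.
export const handler = async (event: CloudFrontRequestEvent): Promise<CloudFrontRequest> => {
  const request = event.Records[0].cf.request;
  if (request.uri.endsWith('/')) {
    request.uri += 'index.html';
  } else if (!request.uri.includes('.')) {
    request.uri += '/index.html';
  }
  return request;
};
```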

The second attempt was a CloudFront Function written in vanilla JavaScript, based on the documentation. It intercepts the CloudFront viewer request and appends a virtual default root object, in this case `index.html`:

```javascript
function handler(event) {
  var request = event.request;
  var uri = request.uri;

  // Check whether the URI is missing a file name.
  if (uri.endsWith('/')) {
    request.uri += 'index.html';
  }
  // Check whether the URI is missing a file extension.
  else if (!uri.includes('.')) {
    request.uri += '/index.html';
  }

  return request;
}
```
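CloudFront Functions are awkward to debug once deployed, so it can pay off to keep the rewrite rule as a pure function and test it locally. Below is a TypeScript port of the logic above with a few table-driven checks. The name `rewriteUri` is hypothetical.

```typescript
// Pure version of the URI rewrite from the CloudFront Function,
// so it can be unit-tested without deploying anything.
function rewriteUri(uri: string): string {
  // "/posts/" -> "/posts/index.html"
  if (uri.endsWith('/')) return `${uri}index.html`;
  // "/posts" (no dot, so treated as having no extension) -> "/posts/index.html"
  if (!uri.includes('.')) return `${uri}/index.html`;
  // "/css/main.css" and other files pass through unchanged
  return uri;
}

// Table-driven check of the cases the function is meant to handle.
const cases: [string, string][] = [
  ['/', '/index.html'],
  ['/posts/', '/posts/index.html'],
  ['/posts', '/posts/index.html'],
  ['/css/main.css', '/css/main.css'],
];
for (const [input, expected] of cases) {
  const actual = rewriteUri(input);
  if (actual !== expected) throw new Error(`${input}: expected ${expected}, got ${actual}`);
}
```

Note the heuristic behind the second branch: any dot in the path counts as a file extension, so a URI like `/v1.2/intro` is passed through unchanged.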

Then I plugged the function into the `functionAssociations` of the CloudFront distribution:

```typescript
// this works 👇🏽
const cfFunction = new cloudfront.Function(this, 'redirect-request-cf', {
  code: cloudfront.FunctionCode.fromFile({
    filePath: __dirname + '/lambda/index.js',
  }),
});

const distribution = new cloudfront.Distribution(
  this,
  'frontend-distribution',
  {
    // other properties...
    defaultBehavior: {
      origin: new origins.S3Origin(bucket, { originAccessIdentity: cloudfrontOAI }),
      compress: true,
      allowedMethods: cloudfront.AllowedMethods.ALLOW_GET_HEAD_OPTIONS,
      viewerProtocolPolicy: cloudfront.ViewerProtocolPolicy.REDIRECT_TO_HTTPS,
      // this worked 👇🏽 🚀
      functionAssociations: [
        {
          eventType: cloudfront.FunctionEventType.VIEWER_REQUEST,
          function: cfFunction,
        },
      ],
      // this did not work 💥
      // edgeLambdas: [
      //   {
      //     eventType: cloudfront.LambdaEdgeEventType.VIEWER_REQUEST,
      //     functionVersion: redirectRequestEdge.currentVersion,
      //     // nor did this 😒
      //     // functionVersion: redirectFunctionVersion,
      //   },
      // ],
    },
  },
);
```

While fixing these issues and learning a lot along the way, I discovered an existing construct, cdk-hugo-deploy, on constructs.dev. However, it expects the static site to be compiled already, and I wanted to learn as much cdk as possible.

As a next step, I wanted to have everything running in a cdk pipeline. The next section explains how I did it, which other roadblocks I hit, and how I solved them.

Adding cdk pipelines

How should I design the pipeline?

Multiple Pipelines

My first thought was to have multiple pipelines: one for the development branch and one for the master branch, see the following image (generated with cdk-dia):

The question then was: which pipeline should update the other, or would I need yet another abstraction layer? 🤔 I also struggled with how I’d destroy the development environment after promoting to production.

Single Pipeline

After talking to Johannes Koch and reading the blog, I decided to switch to a single pipeline as shown in the following image:

But how could I then protect my development environment if I don’t destroy it? I decided to go with IP-based protection using WAFv2, which brings us to the next section.

Having the development environment secured

The first obstacle, besides there being no L2 construct for WAFv2 yet, was this requirement: "For CLOUDFRONT, you must create your WAFv2 resources in the US East (N. Virginia) Region, us-east-1."

I decided to build the rules by hand upfront to understand how WAFs work in detail and what the JSON of the rules and statements looks like. For the pipeline's post-deployment check, the solution was to send a custom header that the WAF allows:

```typescript
pipeline.addStage(hugoPageDevStage, {
  post: [
    new pipelines.ShellStep('HitDevEndpoint', {
      envFromCfnOutputs: {
        URL: hugoPageDevStage.staticSiteURL,
      },
      // add a custom header which is allowed by the WAF 👇🏽
      commands: ['curl -Ssf -H "X-Custom-Header: code-pipeline" "$URL"'],
    }),
  ],
});
```
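Below is a sketch of two rules for the dev web ACL, using the field names of the WAFv2 API JSON. One rule allows a known IP set. The other allows requests carrying the pipeline's custom header. `devAccessRules` and the rule names are hypothetical. The web ACL itself is not shown; it would use scope `CLOUDFRONT`, be created in us-east-1, and have default action `Block`.

```typescript
// Reduced shape of a WAFv2 rule as it appears in the API JSON.
type Action = { Allow: Record<string, never> } | { Block: Record<string, never> };

interface Rule {
  Name: string;
  Priority: number;
  Action: Action;
  Statement: Record<string, unknown>;
  VisibilityConfig: { SampledRequestsEnabled: boolean; CloudWatchMetricsEnabled: boolean; MetricName: string };
}

const visibility = (name: string) => ({
  SampledRequestsEnabled: true,
  CloudWatchMetricsEnabled: true,
  MetricName: name,
});

// Hypothetical helper: allow a known IP set and the pipeline's health check.
// Everything else falls through to the web ACL's default action (Block).
function devAccessRules(ipSetArn: string, headerValue: string): Rule[] {
  return [
    {
      Name: 'allow-known-ips',
      Priority: 0,
      Action: { Allow: {} },
      Statement: { IPSetReferenceStatement: { ARN: ipSetArn } },
      VisibilityConfig: visibility('allow-known-ips'),
    },
    {
      Name: 'allow-pipeline-check',
      Priority: 1,
      Action: { Allow: {} },
      Statement: {
        ByteMatchStatement: {
          // WAF expects the header name in lower case
          FieldToMatch: { SingleHeader: { Name: 'x-custom-header' } },
          PositionalConstraint: 'EXACTLY',
          SearchString: headerValue,
          TextTransformations: [{ Priority: 0, Type: 'NONE' }],
        },
      },
      VisibilityConfig: visibility('allow-pipeline-check'),
    },
  ];
}
```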

Once this worked, I wanted the pipeline to deploy the WAF as well. This is when I started to dig deeper into the concepts of cdk and its pipelines. These are the issues I came across that helped me along the way:

- aws-cdk#6242, because the pipeline should run in one region and deploy separate stacks to other AWS regions by passing in the respective environment. However, this means the ARN of the WebACL has to be passed from one stack to another within the pipeline (same account, different region).

- aws-cdk#6056, errors in the spec, which have since been solved.

- aws-cdk#7985, the lack of documentation for the WAFv2 constructs.

- I found this blog post with the corresponding code, which helped a lot.

- Then I had to work around CloudFormation cross-region references via custom resources, as I got the error:

Error: Stack "code-pipeline/dev-stage/hugo-blog-stack" cannot consume a cross-reference from stack "code-pipeline/dev-waf-web/waf-web-stack". Cross-stack references are only supported for stacks deployed to the same environment or between nested stacks and their parent stack. Set crossRegionReferences=true to enable cross-region references

- Unfortunately, `CfnOutput` did not work either: I got an error because a `CfnOutput` cannot be used between stacks in different regions.

- This is explained in the CloudFormation User Guide. Adding `crossRegionReferences: true` to the stacks, as described in the issue, did not help either. The dependency then fails because it cannot cross stage boundaries, and in the pipeline both stacks live in different stages.

This left two possible solutions:

- The approach from aws-sdk#17725 did not help, as I don’t think adapting the scope should be the solution.

- As I am using `cdk pipelines`, I did not want to edit the CodeBuild project directly. So I went with the SSM Parameter Store solution from this StackOverflow thread, which I also updated to the latest version for `cdk` v2. 🎉

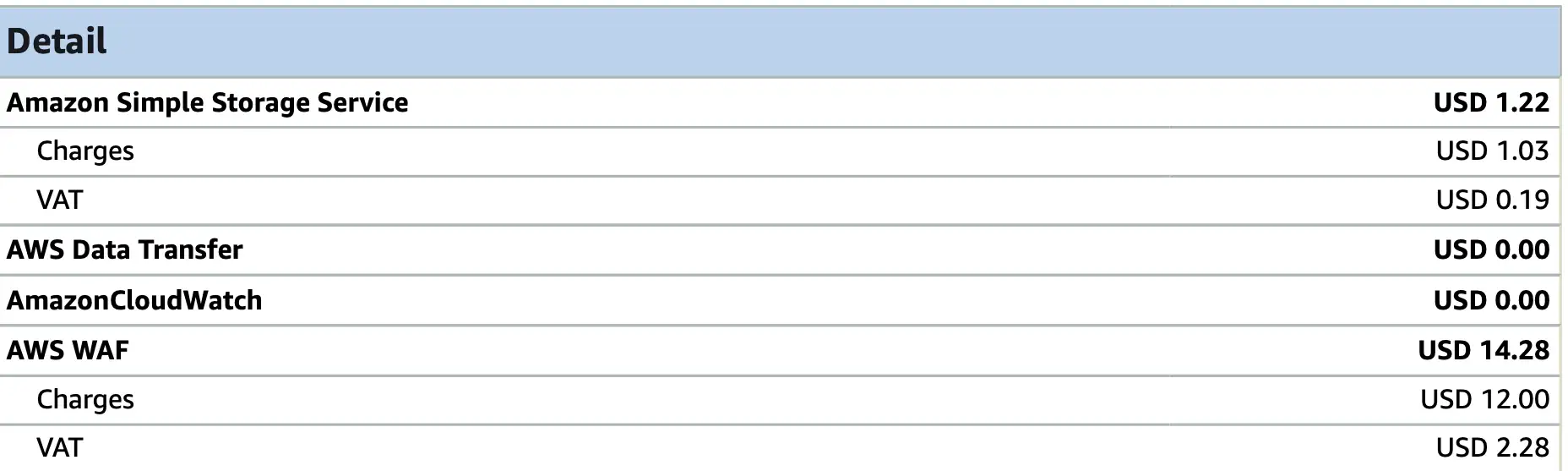

After all those struggles and learnings, I got the project working 🎉. But costs went up 😒, especially because of WAF, which accounted for more than 50% of the account's monthly bill.

At the next AWS user group meetup, I asked how others would approach this or whether they had already faced the challenge. Raphael Manke asked me: “Why don’t you do it with basic auth?” Sure thing, and done. So I implemented basic auth for CloudFront, inspired by the following blog post, and deleted the WAF rules and associations. To clean up, however, I had to delete the WAF stacks in us-east-1 manually afterward, because removing them from the code did not delete them. I am still very much in Terraform mode, where removing a resource from the code destroys it automatically. Step by step.

So my CloudFront function now lives in the /src/lambda/index-${buildstage}.js file, one per stage, because I only need basic auth for the development stage. The development version has the following content:

```javascript
function handler(event) {
  var request = event.request;
  var uri = request.uri;
  var authHeaders = request.headers.authorization;

  // The Base64-encoded Auth string that should be present.
  // It is an encoding of `Basic base64([username]:[password])`
  // The username and password are:
  // Username: john
  // Password: foobar
  var expected = "Basic am9objpmb29iYXI=";

  // If an Authorization header is supplied and it's an exact match, apply the
  // index.html rewrite and pass the request on to the origin.
  if (authHeaders && authHeaders.value === expected) {
    // Check whether the URI is missing a file name.
    if (uri.endsWith('/')) {
      request.uri += 'index.html';
    }
    // Check whether the URI is missing a file extension.
    else if (!uri.includes('.')) {
      request.uri += '/index.html';
    }
    return request;
  }

  // But if we get here, we must either be missing the auth header or the
  // credentials failed to match what we expected.
  // Request the browser present the Basic Auth dialog.
  var response = {
    statusCode: 401,
    statusDescription: "Unauthorized",
    headers: {
      "www-authenticate": {
        value: 'Basic realm="Enter credentials for this super secure site"',
      },
    },
  };

  return response;
}
```

And, of course, I had to switch the pipeline's URL check to send the basic auth header:

```typescript
pipeline.addStage(hugoPageDevStage, {
  post: [
    new pipelines.ShellStep('HitDevEndpoint', {
      envFromCfnOutputs: {
        URL: hugoPageDevStage.staticSiteURL,
      },
      // now we use basic auth here (still hardcoded, I know) 👇🏽
      commands: ['curl -Ssf -H "Authorization: Basic am9objpmb29iYXI=" "$URL"'],
    }),
  ],
});
```
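Both the CloudFront Function and the curl check hardcode the same Base64 string. That string is simply `base64("username:password")` behind the `Basic ` prefix (RFC 7617), so you can derive it instead of copying it. Below is a sketch using Node's `Buffer`. `basicAuthHeader` and `decodeBasicAuth` are hypothetical helpers.

```typescript
// Builds the value of an HTTP Basic Authorization header:
// "Basic " + base64("username:password").
function basicAuthHeader(username: string, password: string): string {
  return `Basic ${Buffer.from(`${username}:${password}`, 'utf8').toString('base64')}`;
}

// Inverse operation; splits at the FIRST colon, because RFC 7617 forbids
// colons in the user-id but allows them in the password.
function decodeBasicAuth(header: string): { username: string; password: string } | undefined {
  const prefix = 'Basic ';
  if (!header.startsWith(prefix)) return undefined;
  const decoded = Buffer.from(header.slice(prefix.length), 'base64').toString('utf8');
  const colon = decoded.indexOf(':');
  if (colon < 0) return undefined;
  return { username: decoded.slice(0, colon), password: decoded.slice(colon + 1) };
}

console.log(basicAuthHeader('john', 'foobar')); // Basic am9objpmb29iYXI=
```

curl can also build this header itself: `curl -Ssf -u john:foobar "$URL"` sends the same `Authorization: Basic am9objpmb29iYXI=` header.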

With this change I removed the WAF cost of about $12 per month. That is a lot, considering that Route 53, CloudFront, S3 storage and GET requests together come to around $1 per month.

Conclusion

It was a very nice learning project to dig deeper into cdk, its concepts, and the community. Some highlights:

- using the `cdk-pipelines` package to have a self-mutating pipeline out-of-the-box

- building a hugo multi-environment setup

- digging through `CloudFront` and especially the connection with `WAFv2`

- and furthermore, how to connect everything in the `CodePipeline`

It does not matter that a simple basic-auth mechanism ended up replacing all the work on connecting WAF to CloudFront. The learnings along the way were golden, and that was the purpose of the project for me.

Next steps

My next steps are to:

- make a GitHub template repository for this setup with `projen` and add tests, for example for this call:

```typescript
const zone = HostedZone.fromLookup(this, 'my-hosted-zone', {
  domainName: 'test.my.com',
});
```

- build my first L2 construct for WAFv2, release it to npm, learn from it and expand it.

Update 2023-08-14

As mentioned, the setup had too many steps, so I decided to wrap it into a projen template.
